TL;DR

The crawl-delay directive is a non-standard rule you can add to a robots.txt file to ask search engine crawlers to wait a specific number of seconds between page requests. Its primary purpose is to reduce server load. However, the most critical fact to understand is that Googlebot completely ignores this directive. Crawlers from other search engines, such as Bing and Yahoo, respect it, but Google's crawl rate must be managed through Google Search Console.

What Is the Crawl-Delay Directive in Robots.txt?

The crawl-delay directive is a command placed in a website's robots.txt file to manage how frequently a web crawler can access a server. In essence, it's a way to tell compliant bots to slow down. The main reason a webmaster would use this directive is to protect their server from being overwhelmed by an excessive number of requests in a short period, which can slow down the site for human users or even cause it to crash. This is especially relevant for sites on shared hosting or with limited server resources.

The syntax for the directive is straightforward. It is placed within a user-agent block and specifies a time in seconds. For example:

```text
User-agent: *
Crawl-delay: 10
```

In this example, the asterisk (*) applies the rule to all crawlers, and the value 10 requests that they wait 10 seconds between each page crawl. If you wanted to target a specific bot that was causing issues, you could replace the asterisk with that bot's user-agent, such as bingbot.
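One way to sanity-check how a parser reads a group like this is Python's standard-library `urllib.robotparser`, whose `RobotFileParser.crawl_delay()` returns the delay for the group that matches a user-agent, or `None`. This is an illustrative sketch only: the helper name `delay_for` is hypothetical, and this particular parser follows the older robots.txt convention in which a blank line ends a record, so writing the group with a blank line between its two lines loses the delay. Search engines' own parsers may treat blank lines differently, but keeping a group's lines contiguous avoids the ambiguity.

```python
from urllib.robotparser import RobotFileParser


def delay_for(robots_txt: str, agent: str):
    """Return the Crawl-delay (seconds) that urllib.robotparser reports for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(agent)


# The example group written contiguously.
compact = "User-agent: *\nCrawl-delay: 10\n"

# The same two lines separated by a blank line.
spaced = "User-agent: *\n\nCrawl-delay: 10\n"

print(delay_for(compact, "bingbot"))  # 10: the wildcard group applies to bingbot
print(delay_for(spaced, "bingbot"))   # None: the blank line ended the group early
```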

It is crucial to recognize that crawl-delay is not part of the original Robots Exclusion Protocol, and it was left out again when the protocol was formally standardized as RFC 9309 in 2022. As a result, its support and interpretation vary significantly among search engines, which raises the most important consideration: which crawlers actually follow the rule. Using it without understanding this nuance can lead to ineffective crawl management.

How Search Engines Handle Crawl-Delay: Google vs. Other Bots

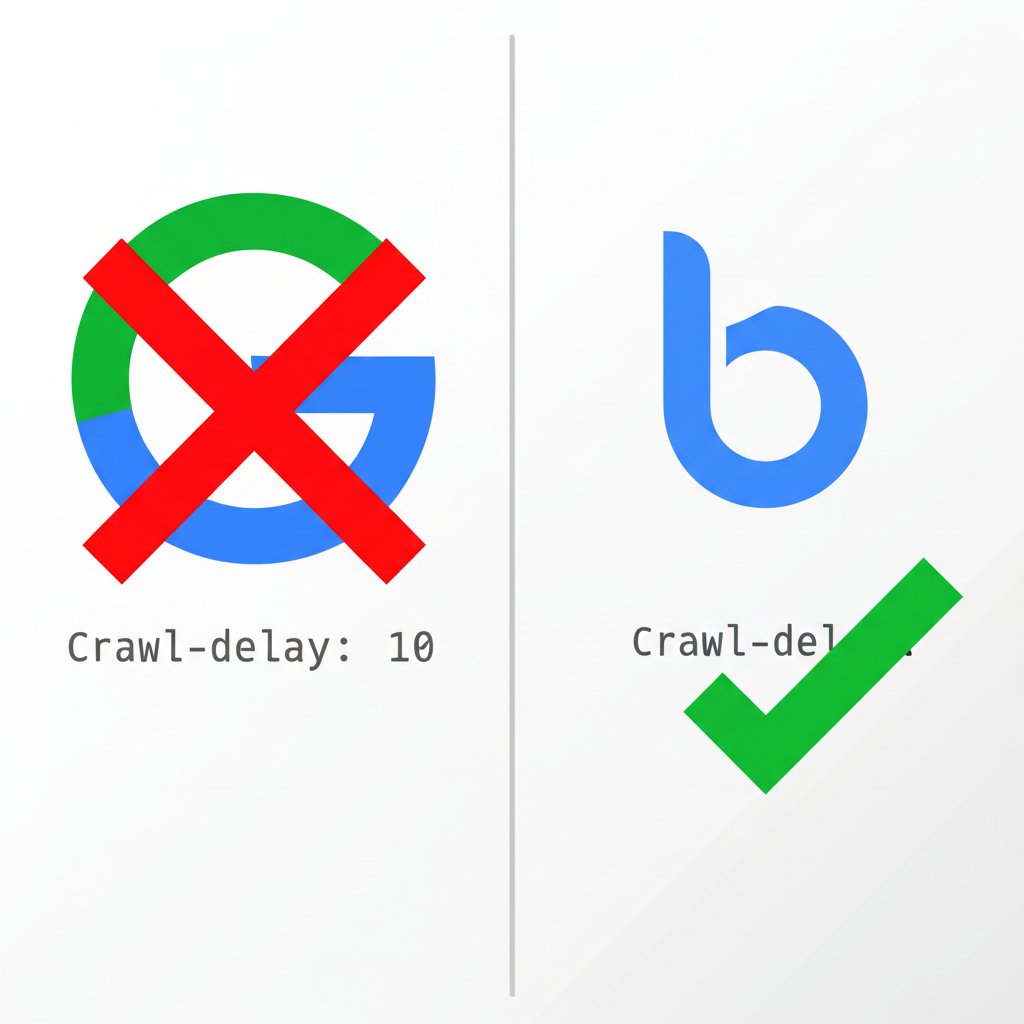

The effectiveness of the crawl-delay directive depends entirely on which search engine's bot is visiting your site. Support is inconsistent, and the difference between how Google handles it versus other major search engines is a critical distinction for any SEO professional or webmaster.

Most importantly, Googlebot does not support the crawl-delay directive. According to Google's official documentation, its crawlers will simply ignore the line. If your goal is to reduce the rate at which Google crawls your site, adding a crawl-delay to your robots.txt file will have no effect. The correct method for managing Googlebot's crawl rate is to adjust the settings directly within your Google Search Console account. This tool allows you to request a lower crawl rate if Google's crawling is causing server issues.

Conversely, other major search engines like Bing and Yahoo do respect the directive. As explained by sources like Conductor, they interpret Crawl-delay: 10 by dividing the day into 10-second windows and crawling a maximum of one page within each window. Yandex also supports the directive but interprets it as a simple 10-second wait between fetches. This makes the directive a valid, though blunt, instrument for controlling the crawl rate of these specific bots.
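The two readings described above can be compared with a toy scheduler. This is a minimal sketch under stated assumptions, not how Bing, Yahoo, or Yandex actually implement throttling: the hypothetical `window_schedule` models the fixed-window reading (at most one fetch per `delay`-second window), and the hypothetical `gap_schedule` models the wait-between-fetches reading.

```python
def window_schedule(desired, delay):
    """Fixed windows: at most one fetch in each [k*delay, (k+1)*delay) slot.

    `desired` is a sorted list of times (in seconds) at which the crawler
    would like to fetch; each fetch is pushed into the next free window.
    """
    out, last_window = [], None
    for t in desired:
        window = t // delay
        if last_window is not None and window <= last_window:
            window = last_window + 1
        out.append(max(t, window * delay))
        last_window = window
    return out


def gap_schedule(desired, delay):
    """Minimum gap: each fetch starts at least `delay` seconds after the previous one."""
    out = []
    for t in desired:
        out.append(t if not out else max(t, out[-1] + delay))
    return out


# Two fetches wanted at t=9 s and t=11 s with Crawl-delay: 10.
print(window_schedule([9, 11], 10))  # [9, 11]: different windows, so both go ahead
print(gap_schedule([9, 11], 10))     # [9, 19]: the second fetch waits a full 10 s
```

With these inputs the windowed model lets two fetches land only 2 seconds apart, while the gap model never allows less than 10 seconds between them.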

To clarify these differences, here is a summary of how major search engines handle the crawl-delay directive:

| Search Engine | Supports Crawl-Delay? | Recommended Control Method |
|---|---|---|
| Google | No | Crawl rate settings in Google Search Console |
| Bing | Yes | Robots.txt directive or Bing Webmaster Tools |
| Yahoo | Yes (follows Bing's engine) | Robots.txt directive |
| Yandex | Yes | Robots.txt directive or Yandex.Webmaster |
| Baidu | No | Baidu Webmaster Tools |

The practical takeaway is clear: do not rely on crawl-delay to manage Google's traffic. For issues with Googlebot, Google Search Console is the only effective solution. For other bots like Bing, the directive remains a functional tool for throttling crawl speed.

Best Practices for Using Crawl-Delay (When Appropriate)

Given that crawl-delay is only effective for certain crawlers, it should be used surgically and with a clear purpose. It is not a general-purpose tool for SEO but a specific solution for server load issues caused by non-Google bots. If you've identified an aggressive crawler overwhelming your server, implementing a targeted crawl-delay can be an effective strategy.

When choosing a value, it's important to understand the trade-offs. A value of 1 or 2 is a relatively gentle request to slow down, while a value of 10 is quite aggressive and can dramatically reduce the number of pages a bot crawls per day. As Yoast points out, a 10-second delay limits a crawler to just 8,640 pages per day. That might be acceptable for a small site, but it could significantly hinder the indexing of a larger one. The main benefit is protecting server resources; the cost is that new and updated content may be discovered more slowly by search engines like Bing.
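The 8,640 figure is simply the 86,400 seconds in a day divided by the delay. The short sketch below (the function name `max_pages_per_day` is hypothetical) applies the same arithmetic to other values. It gives a theoretical ceiling for one compliant crawler, not a prediction of how many pages a search engine will actually fetch.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def max_pages_per_day(crawl_delay_seconds: int) -> int:
    """Upper bound on fetches per day if a bot makes one request per delay interval."""
    return SECONDS_PER_DAY // crawl_delay_seconds


for delay in (1, 2, 10, 30):
    print(f"Crawl-delay: {delay:>2} -> at most {max_pages_per_day(delay):,} pages/day")
```

For delays of 1, 2, 10, and 30 seconds this prints ceilings of 86,400, 43,200, 8,640, and 2,880 pages per day.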

As sites grow and scale their content production, sometimes with the help of AI writing tools, the number of pages to crawl rises and monitoring crawler impact becomes increasingly important for server stability. If you determine a crawl-delay is necessary for a specific, non-Google bot, follow these steps:

- Identify the Aggressive Bot: Analyze your server logs to pinpoint the exact user-agent of the crawler that is causing high server load.

- Add a Targeted Directive: Open your robots.txt file and add a new block specifically for that user-agent. For example, if Bingbot is the issue, your entry would look like this:

  ```text
  User-agent: bingbot
  Crawl-delay: 2
  ```

- Set a Conservative Value: Start with a low value, such as 1 or 2 seconds. This is often enough to alleviate server strain without drastically impacting your site's visibility on that search engine.

- Monitor and Adjust: After implementing the change, continue to monitor your server logs to confirm that the bot's crawl rate has decreased and that server performance has improved. Adjust the value if necessary.
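The first and last steps both come down to reading access logs. The sketch below assumes the widely used "combined" access-log format (available in both Apache and Nginx); the sample lines, IP addresses, and helper names (`top_agents`, `mean_gap_seconds`) are hypothetical. Keep in mind that user-agent strings can be spoofed, so a heavy "bingbot" entry is worth verifying before you act on it.

```python
import re
from collections import Counter
from datetime import datetime

# Assumes the "combined" access-log format: ... [time] "request" status size "referer" "agent"
LOG_RE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
TIME_FMT = "%d/%b/%Y:%H:%M:%S %z"


def parse(lines):
    """Yield (timestamp, user_agent) for every line that matches the format."""
    for line in lines:
        match = LOG_RE.match(line)
        if match:
            yield datetime.strptime(match["time"], TIME_FMT), match["agent"]


def top_agents(lines, n=5):
    """Step 1: the user-agents that sent the most requests."""
    return Counter(agent for _, agent in parse(lines)).most_common(n)


def mean_gap_seconds(lines, needle):
    """Step 4: average seconds between requests whose user-agent contains `needle`."""
    times = sorted(t for t, agent in parse(lines) if needle.lower() in agent.lower())
    if len(times) < 2:
        return None
    return (times[-1] - times[0]).total_seconds() / (len(times) - 1)


BING_UA = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
SAMPLE = [
    f'203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /a HTTP/1.1" 200 512 "-" "{BING_UA}"',
    f'203.0.113.5 - - [10/Oct/2024:13:55:38 +0000] "GET /b HTTP/1.1" 200 512 "-" "{BING_UA}"',
    '198.51.100.7 - - [10/Oct/2024:13:55:40 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
    f'203.0.113.5 - - [10/Oct/2024:13:55:42 +0000] "GET /c HTTP/1.1" 200 512 "-" "{BING_UA}"',
]

print(top_agents(SAMPLE))                   # the bingbot user-agent comes first, with 3 requests
print(mean_gap_seconds(SAMPLE, "bingbot"))  # 3.0 seconds between bingbot requests
```

Running the same `mean_gap_seconds` check before and after changing the delay gives a simple way to confirm that the bot actually slowed down.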

By using crawl-delay as a targeted, measured tool, you can protect your server's health while minimizing any negative impact on your site's presence in the search results of compliant engines.

Frequently Asked Questions About Crawl-Delay

1. What is a good crawl delay?

There is no single "good" value for crawl-delay, as it depends entirely on your server's capacity and the aggressiveness of the crawler. A good starting point is a conservative value like 1 or 2 seconds. According to Rank Math, Bing suggests a range between one and 30 seconds. It's best to start low, monitor the impact on your server, and only increase the delay if the load issues persist.

2. What does crawl delay 10 mean?

A directive of Crawl-delay: 10 instructs compliant bots to wait 10 seconds between crawling pages on your site. For search engines like Bing and Yahoo, this means they will crawl a maximum of one page in any 10-second period. This significantly throttles their crawl speed, limiting them to a theoretical maximum of 8,640 pages per day, which can severely slow down the indexing of large websites.

3. How do I add a crawl delay?

You add the crawl-delay directive to your website's robots.txt file, which is located at the root of your domain (e.g., `yourdomain.com/robots.txt`). Place it under the `User-agent` line for the bot you want to target. For example, to slow down all compliant bots that don't have a more specific group of their own, you would add: `User-agent: *` followed by `Crawl-delay: 5`. Remember, this will not work for Googlebot; to manage Google's crawl rate, you must use the settings in Google Search Console.
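Because a compliant crawler follows the most specific group that matches its user-agent, adding a bot-specific group means that bot no longer reads the `User-agent: *` rules. The sketch below shows this with Python's `urllib.robotparser` and a hypothetical robots.txt (`ExampleBot` is a made-up crawler name). Note that the parser happily reports a delay for Googlebot; it is Googlebot itself that ignores the directive.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a wildcard group plus a bingbot-specific group.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5

User-agent: bingbot
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("bingbot", "ExampleBot", "Googlebot"):
    # bingbot matches its own group; the others fall back to the wildcard group.
    print(agent, parser.crawl_delay(agent))
# bingbot 2
# ExampleBot 5
# Googlebot 5  (reported by the parser, but ignored by Googlebot in practice)
```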

4. Does robots.txt prevent crawling?

Only for crawlers that choose to obey it. The robots.txt file is a set of directives, not enforced rules. While major search engines like Google and Bing respect rules like `Disallow`, malicious bots or less common crawlers may ignore them completely. The `Crawl-delay` directive only slows down compliant crawlers; it does not prevent them from accessing pages. To prevent a page from being indexed by Google, you should use a `noindex` meta tag, which requires that the page remains crawlable.
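Whether robots.txt restrains a bot depends entirely on the bot's own code. The sketch below shows what compliance might look like for a hypothetical crawler named `ExampleBot`: it checks `can_fetch()` against `Disallow` rules and pauses for the `Crawl-delay` between requests. The function `polite_crawl` and its injected `fetch` and `sleep` callables are assumptions made so the example runs without network access; a non-compliant bot would simply skip these checks.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def polite_crawl(urls, agent, robots_lines, fetch, sleep=time.sleep):
    """Fetch only allowed URLs, pausing Crawl-delay seconds between fetches.

    Nothing forces a crawler to run these checks, which is why robots.txt
    only restrains bots that choose to comply.
    """
    rp = RobotFileParser()
    rp.parse(robots_lines)
    delay = rp.crawl_delay(agent) or 0
    fetched = []
    for url in urls:
        if not rp.can_fetch(agent, url):
            continue  # a Disallow rule matched, so a compliant bot skips the URL
        if fetched and delay:
            sleep(delay)  # wait between consecutive requests
        fetch(url)
        fetched.append(url)
    return fetched


urls = [
    "https://example.com/",
    "https://example.com/private/report",
    "https://example.com/blog",
]
pauses = []  # record requested pauses instead of actually sleeping
fetched = polite_crawl(urls, "ExampleBot", ROBOTS_TXT.splitlines(),
                       fetch=lambda url: None, sleep=pauses.append)
print(fetched)  # ['https://example.com/', 'https://example.com/blog']
print(pauses)   # [2]
```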