What is Crawling in SEO and Why It Matters

The Foundation of Search Engine Visibility

Imagine you walk into the world’s largest library—a place where new books appear every minute and shelves stretch endlessly in every direction. Now, picture a dedicated librarian whose job is to discover every new book, read its summary, and decide where it belongs so visitors can find it later. This is exactly how search engine crawling works in the digital world. The librarian is like a search engine crawler, and your website is one of those countless books waiting to be discovered and cataloged.

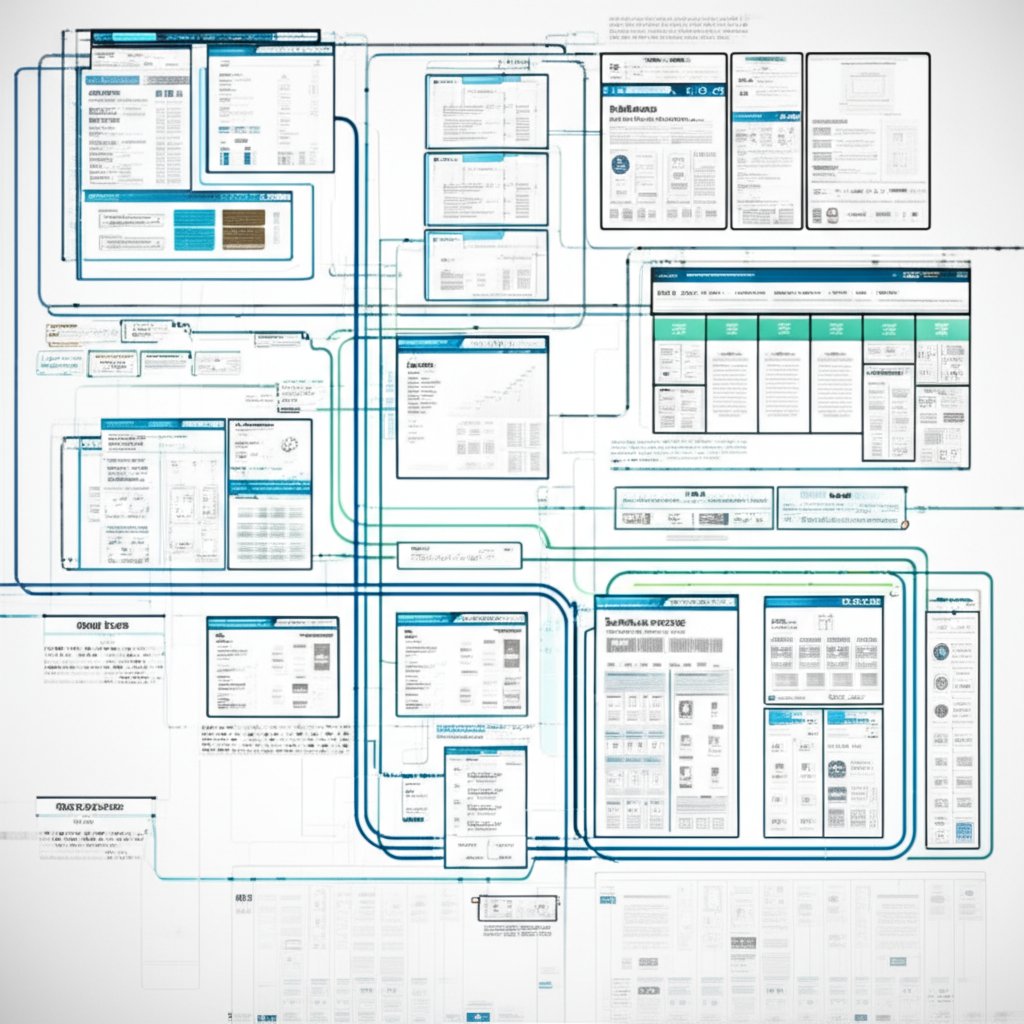

So, what is crawling in SEO? In simple terms, crawling is the process where search engines send out automated bots—often called spiders or crawlers—to systematically browse the web and find new or updated content. These crawlers start with a list of known web addresses and follow links from one page to another, much like moving from one book to the next through a series of references or footnotes. As they travel, they gather information about each page, such as its content, structure, and how it connects to other pages on your site and the wider web.

Why Crawling is Step One for SEO

Sounds complex? Actually, the idea is straightforward: If your website isn’t crawled, it won’t be seen by search engines. Crawling is the very first step in the SEO process. Without it, the rest—indexing (where your content is stored and organized) and ranking (where your pages appear in search results)—simply cannot happen. In other words, a page that isn’t crawled is invisible to anyone searching for it online.

If a search engine can't crawl your page, it's as if it doesn't exist.

This is why understanding what is meant by crawling in SEO is so important for anyone who manages a website. Whether you’re running a personal blog or a large e-commerce store, ensuring your site can be efficiently crawled is the foundation for all your future SEO success. In 2025 and beyond, mastering the basics of crawling means giving your content the best chance to be found, indexed, and ranked by search engines.

Crawling vs Indexing

Crawling vs Indexing: A Critical Distinction

When you first hear the terms "crawling" and "indexing" in SEO, they might sound interchangeable. But if you want your website to shine in search results, understanding the difference is crucial. So, what is the difference between crawling and indexing in SEO? Let’s break it down with a simple analogy.

Imagine you walk into a kitchen to prepare a meal. First, you gather all the ingredients from the pantry and fridge—that’s crawling. Next, you organize those ingredients into a recipe, deciding what goes where and how it all fits together—that’s indexing. Both steps are necessary, but they serve very different purposes.

| Process | Crawling | Indexing |
|---|---|---|
| Goal | Discover new and updated pages across the web | Analyze, organize, and store page content in the search engine’s database |
| What it does | Search engine bots systematically follow links to find web pages | Content from crawled pages is processed, categorized, and added to the search index |
| Analogy | Finding all the ingredients in a kitchen | Organizing ingredients into a recipe book |
| SEO Implication | If a page isn’t crawled, it can’t be indexed or ranked | If a page isn’t indexed, it won’t appear in search results |

How Discovery Leads to Storage

Here’s how the process works in practice: when a search engine’s crawler (like Googlebot) discovers a page, it collects information about it—such as text, images, and links. This is the crawling phase. If the crawler can access the page without any barriers (like a restrictive robots.txt file), the next step is indexing. During indexing, the search engine analyzes the content, determines what the page is about, and stores it in a giant database called the index.

It’s important to note that not every page that gets crawled will be indexed. Search engines may skip pages they view as duplicate, low quality, or blocked by site settings. Only indexed pages can appear in search results, so both steps are essential for SEO success.

This distinction forms the backbone of how crawling and indexing work together in SEO. Without discovery, there’s nothing to analyze. Without analysis and storage, there’s nothing for users to find. And the process doesn’t stop here: ranking comes next, deciding which indexed pages appear first for a search query. Understanding how crawling, indexing, and ranking fit together helps you optimize every stage for better visibility.

Next, let’s meet the digital “spiders” that make crawling possible and explore how they navigate the ever-expanding web.

Understanding the Role of a Search Engine Crawler

Meet the Spiders: Googlebot and Friends

Ever wondered how search engines actually find your website among the billions of pages online? That’s where the “crawler” (also called a spider or bot) comes in. In SEO, a crawler is a specialized software program designed to automatically browse the web, discover new and updated content, and gather data for search engines to process. When you hear terms like Googlebot (for Google), Bingbot (for Bing), or even DuckDuckBot (for DuckDuckGo), these are all examples of web crawlers at work.

Think of a crawler as an incredibly efficient librarian. Instead of walking through library aisles, it moves from one web page to another, following links like stepping stones. Its mission: to find and catalog as much information as possible so that search engines can deliver relevant results to users. Without these bots, search engines would be lost in the chaos of the web, unable to organize or retrieve content efficiently.

How Crawlers Navigate the Web

So how does a web crawler work in practice? The crawling process begins with a seed list, a set of known URLs. The crawler visits each URL, scans the page, and extracts every link it finds. Each new link is added to a queue of pages to crawl next. This process repeats over and over, allowing the crawler to discover millions of interconnected pages. The web is constantly evolving, so crawlers revisit pages at intervals to check for updates or changes.

- Discovery: Crawlers start with a list of known URLs and expand their reach by following links on each page.

- Extraction: Every link found on a page is added to a growing list of pages to visit next.

- Revisiting: Crawlers periodically return to pages to ensure search engines have the latest information.

- Obeying Rules: Most reputable crawlers check for a website’s robots.txt file, which provides instructions about which pages or sections should not be crawled.
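The discovery, extraction, and rule-checking steps above can be sketched in a few lines of Python. This is a simplified illustration rather than how any real search engine crawler is built: the `LINKS` graph, the `DISALLOWED_PREFIXES` tuple, and the `crawl` function are all hypothetical stand-ins, and no network requests are made.

```python
from collections import deque

# Hypothetical link graph standing in for the web: page -> links found on it.
LINKS = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1", "/"],
    "/products": ["/products/widget", "/private/cart"],
    "/blog/post-1": ["/products/widget"],
    "/products/widget": [],
    "/private/cart": [],
}

# Hypothetical stand-in for the rules a robots.txt file would provide.
DISALLOWED_PREFIXES = ("/private/",)


def crawl(seeds):
    """Return pages in the order a breadth-first crawler would visit them."""
    queue = deque(seeds)  # pages waiting to be crawled
    seen = set(seeds)     # pages already queued, so nothing is queued twice
    visited = []
    while queue:
        url = queue.popleft()
        if url.startswith(DISALLOWED_PREFIXES):
            continue  # obeying rules: skip blocked paths
        visited.append(url)
        for link in LINKS.get(url, []):  # extraction: collect links on the page
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


print(crawl(["/"]))
```

Starting from the single seed "/", the sketch reaches every page that is linked and not blocked, and it never visits "/private/cart". Revisiting pages over time is left out to keep the example short.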

Different types of crawlers exist for different purposes. For example, Googlebot is actually split into Googlebot Desktop and Googlebot Smartphone, ensuring that both desktop and mobile versions of sites are crawled. Other bots focus on images (Googlebot Image), videos (Googlebot Video), or even AI-driven content discovery. Each crawler may have its own set of priorities and rules, but their main job remains the same: to help search engines map and understand the vast digital landscape.

Understanding what a crawler is and how these bots operate is crucial for site owners. If your website is easy for crawlers to access and navigate, you increase your chances of being discovered, indexed, and ultimately ranked. Next, let’s explore why managing your site’s crawl budget is essential for making the most of every visit from these digital spiders.

The Concept of Crawl Budget Explained

What is Crawl Budget and Why Should You Care?

When you think about search engines crawling your website, you might imagine an endless stream of bots visiting every page, every day. But in reality, search engines have limits. So, what is crawl budget in SEO? Crawl budget is the number of pages a search engine, like Google, can and wants to crawl on your website within a given timeframe. It reflects both how much crawling your server can handle and how much demand there is for your content. If your site has more pages than your crawl budget covers, some pages may be crawled late or not at all, which can hurt your site’s visibility in search results.

For most small and medium websites, crawl budget isn’t a pressing concern because search engines are efficient at finding and crawling all the important pages. But for large websites—think e-commerce stores, news portals, or sites with tens of thousands of pages—crawl budget becomes a critical factor. If search engines spend too much time on unimportant or duplicate pages, they might miss out on new, updated, or valuable content that you want users to find.

Factors That Influence Your Crawl Budget

Sounds complex? Let’s break it down. Crawl budget isn’t a fixed number—it changes based on several key factors:

- Site Health and Speed: Fast-loading, error-free websites get crawled more efficiently. If your site is slow or returns frequent server errors, search engines will limit how many pages they crawl to avoid overloading your server.

- Authority and Popularity: Sites with higher authority—measured by backlinks and overall reputation—tend to receive a higher crawl budget. Pages that are linked to more often, both internally and externally, are prioritized for crawling.

- Internal Linking Structure: Well-structured internal links help crawlers discover more pages efficiently. Orphan pages (pages with no links pointing to them) are hard for crawlers to find and may never be crawled at all.

- Duplicate Content and Non-Indexable Pages: If your site has lots of duplicate or low-value pages, search engines may waste crawl budget on them instead of focusing on your most important content.

- Server Capacity: If your server can’t handle too many requests, search engines will reduce their crawling frequency to avoid causing problems.

Imagine two websites:

- Site A: Fast, well-structured, with clear internal links and few errors.

- Site B: Slow, with broken links, frequent server errors, and lots of duplicate or low-value pages.

Googlebot will allocate more crawl budget to Site A because it can efficiently discover and process more valuable content without running into roadblocks. Site B, on the other hand, will see limited crawling: important pages might be missed simply because the crawler spends too much time dealing with errors or irrelevant content. This is why limited crawling so often comes down to technical and content quality issues.

Optimizing your site's performance is a direct investment in your crawl budget.

Understanding Crawl Depth and Its Impact

Another important concept is crawl depth in SEO. This refers to how many clicks away a page is from your homepage or main navigation. Pages buried deep within your site’s structure are less likely to be crawled frequently, especially if your crawl budget is already stretched thin. Keeping your most important content within a few clicks from the homepage ensures it gets the attention it deserves from search engine crawlers.
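To make crawl depth concrete, here is a minimal sketch that measures how many clicks each page sits from the homepage. The `SITE` link graph, the `click_depths` function, and the `MAX_DEPTH` threshold of three clicks are assumptions chosen for illustration; in practice the graph would come from a crawl of your own site.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
SITE = {
    "/": ["/category", "/about"],
    "/category": ["/category/page-2", "/product-a"],
    "/category/page-2": ["/product-b"],
    "/about": [],
    "/product-a": [],
    "/product-b": ["/product-b/reviews"],
    "/product-b/reviews": [],
}

MAX_DEPTH = 3  # assumed threshold for "a few clicks"; adjust to taste


def click_depths(graph, home="/"):
    """Map each reachable page to its minimum number of clicks from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:  # first time reached = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(SITE)
deep_pages = sorted(page for page, depth in depths.items() if depth > MAX_DEPTH)
print(deep_pages)
```

In this sample graph, "/product-b/reviews" is four clicks from the homepage, so it is the only page flagged as too deep. Adding a direct link to it from a category page would bring it back within range.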

By understanding and optimizing your crawl budget, you ensure that search engines focus on your most valuable content, improving your chances of ranking well. Next, we’ll look at the technical issues that can block crawlers and how to spot them before they impact your site’s visibility.

Identifying Common Crawl Errors and Issues

Common Roadblocks for Search Engine Crawlers

Ever wondered why some of your web pages are missing from search results? Even with great content, technical crawl issues can make your site invisible to both search engines and users. Let’s break down the most frequent crawl errors and why they matter for anyone learning what is crawling in SEO.

- Robots.txt Misconfigurations: Your robots.txt file tells search engines which pages to crawl and which to skip. A single typo or incorrect directive can accidentally block important sections of your site, preventing search engines from discovering key content. For example, a misplaced "Disallow" command could hide your entire website from Googlebot, leading to limited visibility and lost traffic. Always double-check your robots.txt for errors and ensure it only blocks pages you truly want crawlers to skip. Keep in mind that robots.txt controls crawling, not access, so it is not a way to keep sensitive pages private.

- Broken Internal Links (404 Errors): Internal links connect your site’s pages. When these links point to non-existent pages, they return a 404 error. For crawlers, a 404 is a dead end: it stops them from reaching deeper content and wastes valuable crawl budget. Too many broken links signal poor site maintenance and can leave crawlers spending their time on dead ends before they reach important pages.

- Redirect Chains and Loops: Redirects are used to send visitors (and bots) from one URL to another. However, when redirects chain together (A → B → C) or loop endlessly (A → B → A), crawlers can get stuck or give up entirely. This not only wastes crawl resources but can also prevent the final destination page from being indexed. Short, direct redirects are best for both users and search engines.

- Server Errors (5xx Status Codes): A 5xx error means your server couldn’t fulfill a crawler’s request. These errors are often temporary, but frequent 5xx responses tell search engines your site is unreliable. As a result, crawlers may visit less often or stop altogether, leading to limited crawling and missed opportunities for your content to be indexed.

- Slow Page Load Times: Crawlers have a limited time (crawl budget) to spend on your site. If your pages load slowly, bots can’t cover as much ground before moving on. This means fewer pages get crawled and indexed, especially on large or complex sites. Improving your site’s speed not only helps SEO but also enhances user experience.
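The robots.txt risk in the list above is easy to demonstrate with Python’s standard-library `urllib.robotparser`. The sketch below compares an intended rule set with a one-character mistake. The two robots.txt strings, the `allowed` helper, and the example.com URLs are made up for illustration, and Python’s parser may not match Googlebot’s own parsing in every edge case (wildcards, for instance), so treat this as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt, url, agent="Googlebot"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Intended: keep crawlers out of the admin area only.
INTENDED = """User-agent: *
Disallow: /admin/
"""

# Mistake: the path was cut down to "/", which blocks the whole site.
MISTAKE = """User-agent: *
Disallow: /
"""

product_url = "https://example.com/products/widget"
admin_url = "https://example.com/admin/login"

print(allowed(INTENDED, product_url))  # True: product pages stay crawlable
print(allowed(INTENDED, admin_url))    # False: admin area is blocked
print(allowed(MISTAKE, product_url))   # False: everything is blocked
```

Running a check like this against a handful of your most important URLs before deploying a robots.txt change is a cheap way to catch the "blocked the entire site" mistake.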

Diagnosing Your Website's Crawl Errors

Sounds overwhelming? It doesn’t have to be. Most crawl issues are easy to spot and fix with the right tools. For example, using Google Search Console or a site audit tool can help you quickly identify and address:

- Pages blocked by robots.txt or meta tags

- Broken or orphaned internal links

- Excessive redirect chains

- Server-side errors and slow-loading pages

By regularly auditing your site and fixing these crawl errors, you ensure search engines can access and index all your valuable content. This is the foundation for strong organic visibility and a key part of understanding crawl errors in SEO.
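As one example of what such an audit checks, the following sketch walks a redirect map and labels each starting URL as fine, a chain, or a loop. The `REDIRECTS` dictionary and the `follow` function are hypothetical; a real audit tool would build the map from live HTTP responses rather than a hard-coded table.

```python
# Hypothetical redirect map: source URL -> URL it redirects to.
REDIRECTS = {
    "/old": "/interim",
    "/interim": "/new",
    "/a": "/b",
    "/b": "/a",
}


def follow(url, redirects, max_hops=5):
    """Follow redirects from `url` and return (path_taken, status).

    status is "ok" (zero or one hop), "chain" (two or more hops),
    "loop" (a URL repeats), or "too_long" (more than max_hops hops).
    """
    path = [url]
    while url in redirects:
        url = redirects[url]
        if url in path:
            return path + [url], "loop"
        path.append(url)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    return path, "chain" if len(path) > 2 else "ok"


for start in ("/old", "/interim", "/a"):
    print(start, follow(start, REDIRECTS))
```

Here "/old" is reported as a chain because it takes two hops to reach "/new", while "/a" is reported as a loop. The usual fix for a chain is to point the first URL straight at the final destination.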

Next, let’s look at how you can use Google Search Console to proactively check your site’s crawlability and keep your SEO efforts on track.

How to Check Your Site for Crawlability Issues

Your Guide to the Google Search Console Coverage Report

When you want to know if your website is truly visible to search engines, Google Search Console (GSC) is your go-to toolkit. But where should you start if you suspect crawl errors or want to make sure Googlebot is seeing your most important pages? Let’s walk through a hands-on process using GSC to analyze your site’s crawlability—step by step, even if you’re new to SEO.

- Access the Coverage Report: Log in to Google Search Console and select your property (website). From the left-hand menu, open the Coverage report under the Index section (in current versions of Search Console this is labeled Pages under Indexing, and the report is called Page indexing). This report gives you a high-level overview of how Googlebot interacts with your site, including which pages are indexed, which have errors, and which are excluded from the index.

- Interpret Statuses (Error, Valid with Warnings, Valid, and Excluded): The Coverage report has traditionally categorized your pages into four main statuses. Newer versions of the report group Valid and Valid with warnings under Indexed, and Error and Excluded under Not indexed, but the underlying reasons are the same:

  - Error: Pages that couldn’t be indexed due to serious issues (like server errors or blocked resources). These require immediate attention.

  - Valid with warnings: Pages that are indexed but have minor issues. Review these to prevent future problems.

  - Valid: Pages successfully crawled and indexed; these are in good shape.

  - Excluded: Pages discovered by Google but not indexed (often due to intentional blocks, canonical tags, or duplicate content). Review these to ensure nothing important is being missed.

You’ll notice that clicking on any status opens a list of affected URLs, so you can investigate issues one by one.

- Use the URL Inspection Tool for Deep Dives: Want to check if a specific page is being crawled or indexed? Use the URL Inspection Tool at the top of GSC. Enter your page’s URL, and you’ll instantly see:

  - The last crawl date by Googlebot

  - Crawl errors or issues with accessibility

  - Indexing status (whether the page is in Google’s index or not)

  - Any enhancements or structured data detected

If the page isn’t indexed, the tool will suggest reasons—like being blocked by robots.txt or marked as ‘noindex.’ You can also request indexing for updated or new pages directly from this tool.

- Monitor Crawl Activity with the Crawl Stats Report: For a deeper look at Googlebot’s activity, open the Crawl Stats report (found under Settings in GSC). Here, you’ll see:

  - How many requests Googlebot made to your site over time

  - Server response codes (200, 404, 500, etc.)

  - Average response times and potential availability issues

This report is especially valuable if you’re troubleshooting crawl errors or want to ensure your server isn’t blocking or slowing down Googlebot. If you notice spikes in errors or drops in crawl requests, it’s time to investigate further.
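If you also have access to your server logs, you can produce a rough equivalent of the response-code breakdown yourself. The sketch below counts status codes for requests whose user agent mentions Googlebot. The sample `LOG` lines, the `LINE` pattern, and the `googlebot_status_counts` function are illustrative assumptions: the lines imitate the common "combined" log format, which your server may not use, and a user-agent string can be spoofed, so this is not proof that the requests really came from Google.

```python
import re
from collections import Counter

# Hypothetical access-log lines in the "combined" log format.
LOG = """\
66.249.66.1 - - [10/Jan/2025:10:00:01 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/Jan/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [10/Jan/2025:10:00:09 +0000] "GET /blog HTTP/1.1" 200 9001 "-" "Mozilla/5.0 (Windows NT 10.0)"
66.249.66.1 - - [10/Jan/2025:10:00:12 +0000] "GET /checkout HTTP/1.1" 500 0 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""

# Matches the quoted request and the three-digit status code that follows it.
LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def googlebot_status_counts(log_text):
    """Count HTTP status codes for log lines that mention Googlebot."""
    counts = Counter()
    for line in log_text.splitlines():
        if "Googlebot" not in line:
            continue  # ignore regular visitors and other bots
        match = LINE.search(line)
        if match:
            counts[match.group("status")] += 1
    return counts


print(googlebot_status_counts(LOG))
```

A rising share of 404 or 5xx codes in a tally like this is the same warning sign the Crawl Stats report surfaces, just seen from your side of the connection.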

Making Sense of Crawlability Insights

Sounds like a lot? Once you get familiar with these reports, you’ll have a clear window into how Google interacts with your site. Imagine discovering that key product pages aren’t being indexed, or that a technical glitch is blocking Googlebot from crawling your blog posts. By regularly reviewing your Coverage and Crawl Stats reports, and using the URL Inspection Tool for spot checks, you can catch and fix crawl issues before they impact your visibility.

Understanding crawling, indexing, and ranking goes beyond theory: it’s about using these practical tools to ensure your hard work gets seen. In the next section, we’ll explore advanced strategies to optimize your site for even more efficient crawling and long-term SEO success.

Advanced Strategies to Optimize for Crawling

Proactive Strategies for Better Crawling

After diagnosing crawl issues, you might wonder: how do you move from fixing errors to truly optimizing your site for search engine crawlers? The answer lies in a handful of high-impact strategies that help you make the most of your crawl budget, improve crawl depth, and ensure every important page gets discovered. Let’s break down the most effective techniques for 2025, whether you run a small blog or a sprawling e-commerce platform.

- Optimize Your robots.txt File: Think of your robots.txt file as the bouncer at the door of your website. It tells search engine crawlers (like Googlebot and Bingbot) which pages or directories they can access and which ones to skip. A well-configured robots.txt helps focus your crawl budget on valuable content, preventing bots from wasting time on irrelevant or duplicate pages. For example, you might block internal search results, admin pages, or thank-you pages that offer no SEO value. But be careful: a single misstep can accidentally block your entire site from search engines. Always double-check your directives and test them using tools within Google Search Console or your preferred SEO platform. For large or frequently updated sites, regularly review and update your robots.txt to reflect new sections or changes in site structure.

- Create and Submit an XML Sitemap: Your XML sitemap acts as a tour guide for crawlers, providing a clear, organized list of all the important URLs you want search engines to discover. This is especially useful for deep or complex sites where some pages might be buried several clicks from the homepage. Make sure your sitemap includes only high-value pages (like product listings, category pages, or cornerstone blog posts) and excludes thin, duplicate, or low-priority content. Once created, submit your sitemap to Google Search Console and reference its location in your robots.txt file. For large or dynamic sites, automate sitemap updates to ensure new content is always discoverable. Specialized sitemaps for images, videos, or news can further boost visibility for multimedia-heavy or news-driven platforms.

- Implement a Logical Internal Linking Structure: Internal links are like road signs for both users and crawlers. A logical, hierarchical linking structure helps distribute link equity, guides crawlers to your most important pages, and ensures deep pages aren’t left undiscovered. Start by grouping related content into topic clusters: pillar pages covering broad topics, with supporting subpages linked beneath. This not only clarifies your site’s architecture but also improves crawl depth, making it easier for bots to reach content several layers deep. Regularly audit your site to fix broken links and update older, high-authority pages with links to new content. For large sites, use category pages and breadcrumbs to create additional pathways, and ensure paginated series (like blog archives or product listings) are linked in a way that exposes all relevant URLs to crawlers.

- Improve Site Speed and Technical Performance: Crawlers have a limited amount of time and resources to spend on each site. If your pages load slowly or require complex JavaScript rendering, bots may crawl fewer pages before moving on. Optimize images, leverage browser caching, and minimize unnecessary scripts to boost speed. For JavaScript-heavy sites, consider server-side rendering or pre-rendering (or dynamic rendering as a stopgap) so that bots receive HTML with all critical content accessible and crawlable. Fast-loading, technically sound sites not only get crawled more efficiently but also offer users a better experience, an SEO win-win.

- Address Duplicate Content and Pagination Issues: Duplicate or near-duplicate pages can waste crawl budget and dilute ranking signals. Use canonical tags to point search engines to your preferred version of a page, especially in paginated series or when URL parameters generate multiple versions of similar content. For large e-commerce or content sites, handle pagination carefully to avoid trapping crawlers in endless loops or missing key product pages. Use noindex or disallow directives alongside smart internal linking to focus crawl resources on your highest-value pages, but avoid applying both to the same URL: a crawler blocked by robots.txt never fetches the page, so it cannot see a noindex tag.

- Leverage Structured Data and Breadcrumbs: Structured data (schema markup) helps search engines understand the context and relationships between your pages. Breadcrumbs, in particular, provide a clear hierarchical path, making it easier for crawlers to map your site’s architecture and prioritize important sections. Implementing these elements not only improves crawl efficiency but can also enhance your search listings with rich snippets, attracting more clicks.
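The XML sitemap described in the list above is a simple file to generate automatically. Here is a minimal sketch using Python’s standard `xml.etree.ElementTree` module. The `build_sitemap` function, the `PAGES` list, and the example.com URLs and dates are placeholders; a real site would pull its URLs and last-modified dates from its CMS or database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical high-value pages to include.
PAGES = [
    ("https://example.com/", "2025-01-10"),
    ("https://example.com/products/widget", "2025-01-08"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Once the output is saved as sitemap.xml at the site root, a line such as `Sitemap: https://example.com/sitemap.xml` in robots.txt points crawlers to it, in addition to submitting it in Google Search Console.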

Optimizing Your Site Architecture for Spiders

Imagine your website as a city and crawlers as tourists with limited time. The easier you make it for them to find main attractions (your key pages), the more likely they are to visit—and recommend—those spots. Here are a few extra tips:

- Keep important pages within three clicks of the homepage to maximize crawl depth efficiency.

- Regularly audit your site for orphan pages (pages with no internal links) and connect them to relevant sections.

- Use clear, descriptive anchor text for internal links so crawlers (and users) understand what to expect.

- Monitor your crawl stats and adjust strategies as your site grows or changes, ensuring that your most valuable content always stays front and center for both users and search engine bots.
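The orphan-page audit from the tips above boils down to a set comparison: every page you know about, minus every page that some other page links to. The sketch below assumes you already have a list of known URLs (for example from your sitemap) and an internal link graph from a site crawl. `KNOWN_PAGES`, `LINK_GRAPH`, and `find_orphans` are hypothetical names used only for this example.

```python
# Hypothetical list of pages you expect to be discoverable.
KNOWN_PAGES = ["/", "/blog", "/blog/post-1", "/landing/spring-sale"]

# Hypothetical internal link graph: page -> pages it links to.
LINK_GRAPH = {
    "/": ["/blog"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": [],
    "/landing/spring-sale": ["/"],
}


def find_orphans(known_pages, link_graph, home="/"):
    """Return known pages that no other page links to (homepage excluded)."""
    linked = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(set(known_pages) - linked - {home})


print(find_orphans(KNOWN_PAGES, LINK_GRAPH))
```

In this sample data the landing page links out to the homepage, but nothing links to it, so it is the one orphan reported. Linking to it from a relevant section would give crawlers a path to reach it.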

By proactively implementing these advanced strategies, you’ll make your site a welcoming, easily navigable place for search engine crawlers. This not only maximizes your crawl budget but also lays the groundwork for better indexing, higher rankings, and sustainable SEO success. Next, let’s bring everything together and discuss how to ensure your content is always crawl-ready in the evolving digital landscape.

Ensuring Your Content is Always Crawl-Ready

Bringing It All Together: Crawling as the Bedrock of SEO

When you step back and look at the full SEO process, you’ll notice that everything starts with one crucial step: crawling. Without successful crawling, even the best content or most innovative website design becomes invisible to search engines. Think of it like building a beautiful storefront in a hidden alley—if no one can find it, it won’t attract visitors. That’s why understanding what is crawling in SEO and how it underpins every other aspect of search visibility is essential for anyone serious about digital growth in 2025.

Throughout this guide, we’ve explored the journey from crawling to indexing, and how technical factors and site structure can either open doors or create roadblocks for search engine bots. But there’s another layer to consider: the quality and organization of your content itself. When your pages are well-structured, easy to navigate, and free from technical errors, you make it simple for crawlers to access and understand your site. This clarity not only improves your chances of ranking but also enhances user experience—a win-win for both audiences.

The Future of Crawl-Friendly Content Creation

Sounds like a lot to manage? It can be, especially as websites grow in size and complexity. That’s where modern content creation tools step in to bridge the gap. For teams looking to scale up without sacrificing SEO best practices, solutions like BlogSpark offer a streamlined way to produce structured, crawl-friendly articles. By leveraging AI-powered outlines, keyword optimization, and built-in checks for technical SEO, you can ensure every new post is immediately ready for discovery by search engines.

Imagine never having to worry if your latest blog post is missing from Google, or if technical errors are holding back your best content. With the right system in place, you can focus on what matters most: delivering value to your audience and growing your brand’s online presence. Whether you’re a solo blogger or part of a large marketing team, making crawl optimization a routine part of your workflow sets the foundation for long-term SEO success.

Great SEO starts with great content architecture, making your site a welcoming place for search engine crawlers.

As you move forward, remember that the basics of what is crawling in SEO are timeless: make it easy for both users and bots to find, access, and understand your content. By staying proactive with site audits, technical fixes, and high-quality content creation, you’ll ensure your website remains visible and competitive in a rapidly evolving digital landscape.

Frequently Asked Questions

1. What is crawling in SEO and why is it important?

Crawling in SEO refers to the process where search engine bots systematically browse websites to discover new or updated content. This step is essential because, without crawling, your pages cannot be indexed or ranked in search results, making them invisible to users searching online.

2. How does crawling differ from indexing in SEO?

Crawling is the discovery phase where bots find web pages by following links, while indexing is the process of analyzing and storing those pages in the search engine's database. Only indexed pages can appear in search results, so both processes are crucial for SEO success.

3. What factors affect a website's crawl budget?

A website's crawl budget is influenced by factors such as site speed, server reliability, internal linking structure, content quality, and overall site authority. Healthy, well-structured sites tend to receive a higher crawl budget, ensuring more pages are discovered and indexed.

4. How can I identify and fix crawl errors on my website?

You can use Google Search Console to access the Coverage report, which highlights crawl errors like blocked pages, broken links, or server issues. Regularly reviewing these reports and resolving errors—such as fixing robots.txt misconfigurations or repairing broken links—helps maintain search visibility.

5. What are the best strategies to optimize a website for crawling?

To optimize for crawling, focus on creating a clean robots.txt file, submitting an XML sitemap, building a logical internal linking structure, improving site speed, and addressing duplicate content. Using tools like BlogSpark can help ensure your content is structured and crawl-friendly from the start.